
- 15 July, 2024

It’s hard for anyone to ignore AI right now – the current pace of development and adoption of these new tools is unprecedented.

At Balderton, we have a rich history of investing in companies applying AI (including PhotoRoom, Supernormal, Levity, Wayve, Sophia Genetics and more), and we remain as excited as ever about the opportunities on the horizon. That is why we decided to host the largest UK meet-up for builders in AI in partnership with LangChain, an open-source framework for LLM application development and one of the most active open-source communities out there. Speakers included Nuno Campos from LangChain, Aymeric from Agemo and Mayo from Sienna AI, for an evening of debate and networking exploring the future of LLMs.

One of the main topics of the evening was the idea of Autonomous Agents, which is becoming a central theme for the LangChain framework. Last year we wrote a three-part series on emerging use cases of LLMs, and one of our main focal points was the idea of Actionable LLMs, i.e. models which can generate actions rather than just content. Agents have the potential to be a catalyst for this concept; this piece explores what they are, why that is, and some hurdles to consider going forward.

So what are Agents? Well, according to the team who coined the phrase…

The benefits of Agents are similar to the reasons ChatGPT introduced plugins: they connect LLMs with external sources of data and computation, including search, APIs, databases, calculators and code execution. Thanks to the iterative feedback loop demonstrated in awesome demos such as AutoGPT and BabyAGI, these Agents can sometimes recover from errors and handle multi-hop tasks. In pseudocode, an agent loop looks like this:

- Choose tool to use

- Observe output of the tool

- Repeat until a stopping condition is met (this could be LLM-determined or set via hardcoded rules)
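
The loop above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not LangChain's implementation: `scripted_policy` is a hypothetical stand-in for the LLM call that picks the next action, and `lookup` and `calculator` are hypothetical toy tools. The first lookup deliberately uses a bad key so the trace shows the agent recovering from a tool error, while `max_steps` plays the role of a hardcoded stopping rule.

```python
import operator
from collections.abc import Callable
from typing import Optional

# Hypothetical toy tools standing in for search, APIs, databases, etc.
FACTS = {"seconds_per_day": "86400"}
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def lookup(key: str) -> str:
    return FACTS[key]  # raises KeyError for unknown keys


def calculator(expression: str) -> str:
    a, op, b = expression.split()  # expects "a op b", e.g. "2 * 3"
    return str(OPS[op](float(a), float(b)))


Tool = Callable[[str], str]
Step = tuple[str, str, str]  # (tool name, tool input, observation)
TOOLS: dict[str, Tool] = {"lookup": lookup, "calculator": calculator}


def scripted_policy(task: str, history: list[Step]) -> tuple[str, str]:
    """Hypothetical stand-in for the LLM: picks the next action from the history."""
    if not history:
        return "lookup", "seconds in a day"  # deliberately bad key
    tool, _, obs = history[-1]
    if obs.startswith("error"):
        return "lookup", "seconds_per_day"  # recover by retrying with another key
    if tool == "lookup":
        return "calculator", f"{obs} * 7"
    return "finish", obs  # the policy decides it is done


def run_agent(
    task: str,
    choose_action: Callable[[str, list[Step]], tuple[str, str]],
    tools: dict[str, Tool],
    max_steps: int = 10,
) -> tuple[Optional[str], list[Step]]:
    history: list[Step] = []
    for _ in range(max_steps):  # hardcoded stopping rule: a step budget
        action, arg = choose_action(task, history)  # choose the tool to use
        if action == "finish":  # LLM-determined stopping condition
            return arg, history
        try:
            observation = tools[action](arg)  # observe the tool's output
        except Exception as exc:
            observation = f"error: {exc!r}"  # fed back so the policy can recover
        history.append((action, arg, observation))
    return None, history  # hit the step budget without an answer


answer, trace = run_agent("How many seconds are in a week?", scripted_policy, TOOLS)
for step in trace:
    print(step)
print(answer)  # → 604800.0
```

Each pass through the loop is a separate call to `choose_action`, so with a real LLM behind it every extra step is another model query.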

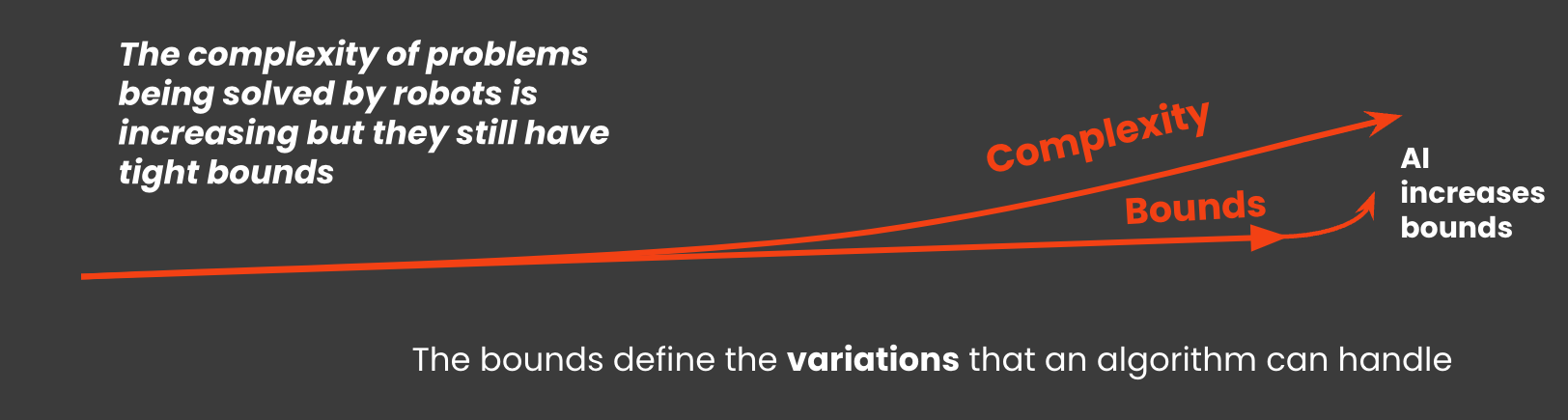

Robotic process automation (RPA) is by no means a new topic, but it has been given a new lease of life by Agents. The complexity of what RPA can do has steadily increased, driven by successive layers of abstraction; however, the bounds (i.e. how much variance the algorithm can handle) have remained tight. The addition of AI is now dramatically widening those bounds, and with them the scope of what can be automated and by whom. The same can be said of physical robots, which we explore here.

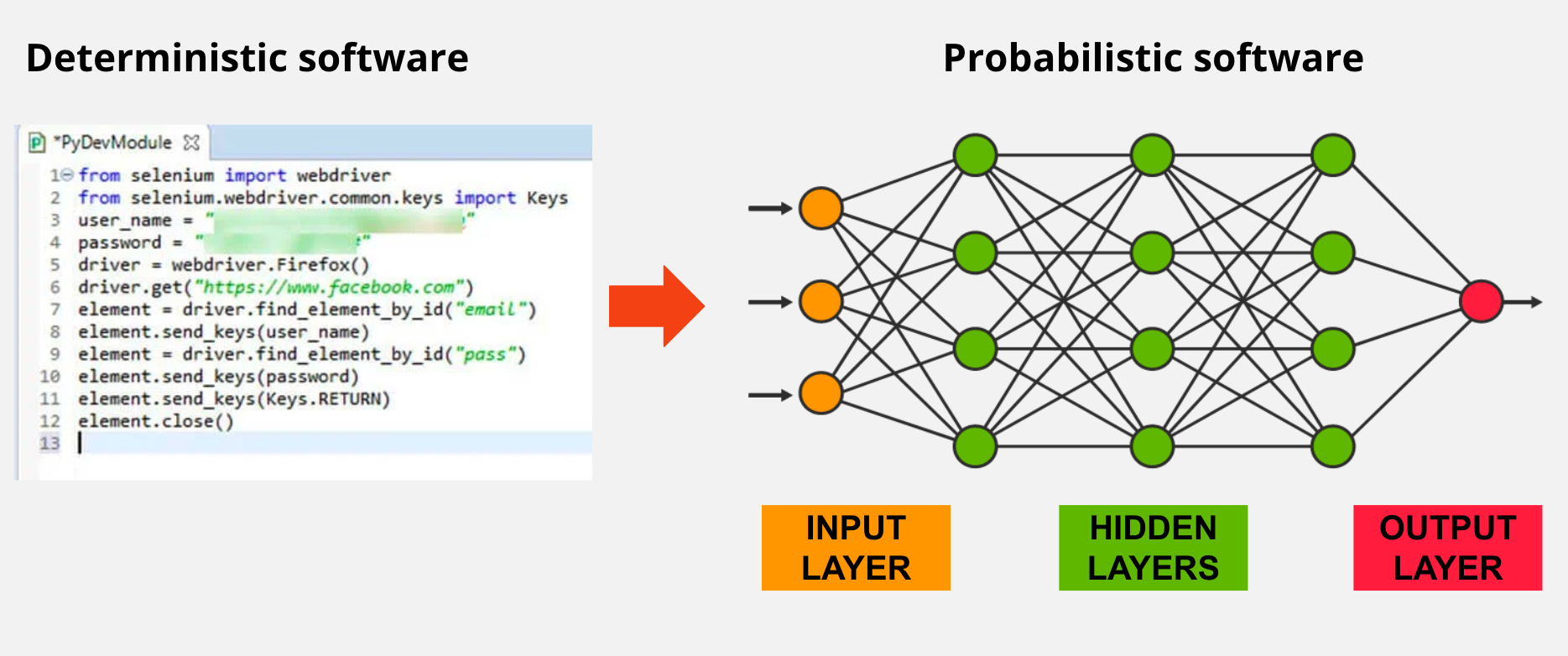

Agents are still novel and therefore come with many limitations, such as high compute costs (due to the number of iterative LLM queries required to complete a single task), difficulty choosing tools and a lack of reusable memory. However, the main hurdle we see before large-scale adoption, especially in enterprise, is the shift from deterministic to probabilistic software.

In “traditional” automation, workflows are defined up front and will work 100% of the time, provided no external errors are introduced. In probabilistic workflows, i.e. where an LLM is the “reasoning engine”, there is uncertainty over whether the task will be solved, due to the inherently probabilistic nature of neural networks. Today that uncertainty is quite high, and although it will shrink as models become more accurate, it will always exist. It is also important to note that even if the failure rate of a single agent can be reduced to 1%, chaining agents together compounds it: if each of $n$ chained agents succeeds with probability $0.99$, the whole chain succeeds with probability only $0.99^n$, which is roughly 90% for 10 agents.

This means there will have to be a mental shift towards probabilistic workflows, in which users work with this uncertainty and develop methods to manage it. Some workflows (e.g. customer support) can already cope with it by triaging to a human when needed. Other, higher-value workflows (e.g. finance) will require the user to understand the risk deeply and be able to quantify it against the potential gain before the agent can be deployed into production. There is also plenty of potential for an end layer, or “deterministic orchestrator” as coined by Agemo, to help ensure certainty of outcome in what these models can do.
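
To make the compounding concrete, here is a small Python sketch. It assumes each agent in the chain fails independently and with the same probability, which is a simplification (real failures may well be correlated); `chain_success` and `required_step_success` are illustrative helper names, not part of any library.

```python
def chain_success(step_success: float, n: int) -> float:
    """Probability that all n chained agents succeed, assuming independent failures."""
    return step_success ** n


def required_step_success(target: float, n: int) -> float:
    """Per-agent success rate needed for an n-agent chain to succeed with probability `target`."""
    return target ** (1 / n)


# A 1% failure rate per agent, compounded over longer chains
for n in (1, 5, 10, 50):
    print(n, round(chain_success(0.99, n), 3))
# 1 0.99
# 5 0.951
# 10 0.904
# 50 0.605

print(round(required_step_success(0.95, 10), 4))  # 0.9949
```

Inverting the formula shows how demanding long chains are: for a 10-agent chain to succeed 95% of the time, each agent needs roughly 99.5% reliability.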