Most people associate the phrase “self-driving car” with Google’s ambitious prototype, which only vaguely resembles a traditional car. For one thing, in Google’s version of the future a steering wheel will no longer be needed. In reality, the universe of autonomous vehicles is much larger than Google, and some early prototypes are already on the streets. Let’s dive into what we really mean when we talk about driverless cars.

What are autonomous vehicles, and when will they be on the road?

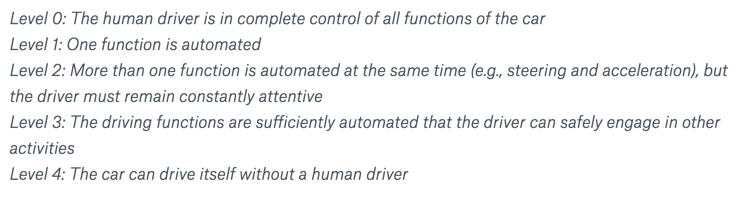

The National Highway Traffic Safety Administration (NHTSA) in the US has identified five levels of driving automation, ranging from Level 0, “The human driver is in complete control of all functions of the car”, to Level 4, “The car can drive itself without a human driver”, with three levels in between. At each subsequent level, more functions are automated, so the driver no longer needs to perform them. Our current technology is somewhere between Level 3 and Level 4.

Levels of automation as identified by the National Highway Traffic Safety Administration

One of the questions facing companies working in this field is whether we can skip Level 3 and go straight from Level 2 to Level 4. Google has famously proclaimed that this is the route to take.

Level 3, also called “shared driving”, is dangerous because humans aren’t very good at quickly taking control of the vehicle from the computer. Tests run by Audi show that it takes an average of 3 to 7 seconds, and sometimes as long as 10, for a driver to become alert and take control, even with flashing lights and verbal warnings. In emergency situations this is a real hazard.
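
To put that takeover window in perspective, here is a rough back-of-the-envelope sketch of how far a car travels while the driver is still getting ready to take control. The 100 km/h cruising speed is an assumption for illustration, not a figure from Audi’s tests, and the sketch ignores any braking the system itself might apply.

```python
def distance_travelled_m(speed_kmh: float, seconds: float) -> float:
    """Distance in metres covered at a constant speed during a takeover delay."""
    metres_per_second = speed_kmh * 1000 / 3600
    return metres_per_second * seconds


# Assumed highway speed of 100 km/h (illustrative only, not from the Audi tests).
ASSUMED_SPEED_KMH = 100

for delay in (3, 7, 10):
    print(f"{delay:>2} s -> {distance_travelled_m(ASSUMED_SPEED_KMH, delay):.0f} m")
```

At the assumed speed, even the 3-second lower bound means roughly 83 metres covered before the human is back in control, and a 10-second delay stretches that to about 278 metres.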

Most traditional car manufacturers believe that we need to get to Level 3 before cars can become fully autonomous. This approach allows them to test multiple technologies and collect data, which is essential for training the algorithms. It also allows auto manufacturers to keep selling “conventional” cars for the next couple of years without significantly changing their business model.

While we’re on the subject of business models: fully autonomous cars will challenge the way value is traditionally captured in this space. Utilisation rates for personal cars are currently around 4–5%. Given the high upfront cost of an autonomous vehicle (driven by the cost of sensors and algorithms as well as batteries, since most vehicles at this point will be electric), the route to adoption lies in sharing resources and increasing utilisation rates. Uber, Lyft and other ride-sharing and car-sharing businesses are perfectly positioned to take the lead. Following this trend, BMW i Ventures recently invested in RideCell, a car-sharing platform that now powers BMW’s ReachNow service in Seattle. On the other side of the globe, nuTonomy, a company that develops software and algorithms for self-driving cars, is planning to launch an autonomous taxi service in Singapore as early as this year.
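
The utilisation argument is easy to make concrete. A 4–5% utilisation rate works out to roughly one hour of use per day. The sketch below also spreads a vehicle’s purchase price over the hours it is actually used; the $100,000 price, five-year lifetime and 50% shared-fleet utilisation are hypothetical numbers chosen only to show the shape of the argument, not industry data.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365


def hours_used_per_day(utilisation: float) -> float:
    """Hours per day a vehicle is actually in use at a given utilisation rate (0 to 1)."""
    return utilisation * HOURS_PER_DAY


def upfront_cost_per_hour_used(vehicle_cost: float, lifetime_years: float, utilisation: float) -> float:
    """Spread the purchase price over the hours the vehicle is actually used."""
    hours_used = utilisation * HOURS_PER_DAY * DAYS_PER_YEAR * lifetime_years
    return vehicle_cost / hours_used


# Hypothetical figures, for illustration only.
VEHICLE_COST = 100_000  # assumed price of an autonomous vehicle, in dollars
LIFETIME_YEARS = 5      # assumed service life, in years

# 4% and 5% match the personal-car figures above; 50% is an assumed shared-fleet rate.
for u in (0.04, 0.05, 0.50):
    print(
        f"{u:.0%} utilisation: {hours_used_per_day(u):.1f} h/day, "
        f"${upfront_cost_per_hour_used(VEHICLE_COST, LIFETIME_YEARS, u):.2f} per hour used"
    )
```

Because the upfront cost is divided by hours of use, raising utilisation tenfold (from 5% to 50%) cuts the upfront cost per hour used tenfold, whatever purchase price you assume.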

As more and more functions of a car are automated, the big question remains: what’s keeping us from full automation? There are two main answers: sensor perception, and computers’ ability to make decisions in uncertain conditions.

LIDAR and camera-based vision systems are currently competing with each other for the dominant position in the world of perception sensors. LIDAR, a light-based radar, does not rely on ambient light, which allows it to produce an accurate picture of the world on cloudy days and at night. However, the LIDAR-based vision system used by Google currently costs over $70,000. Cameras, on the other hand, are cheap and can “see” in colour (unlike LIDAR, which “sees” in grayscale), but they consume a lot of computer memory, need illumination at night, and can’t determine what they’re looking at as reliably as a radar. While the cost of LIDAR systems is expected to decrease over time, a breakthrough in computer vision is needed for camera-based sensors to become a viable solution. In reality, a “sensor-fusion” system that combines signals from multiple sensors will likely be needed to make autonomous vehicles reliable, but that, too, is a computer-vision problem yet to be solved.
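
One of the simplest ways to illustrate what a sensor-fusion system does is inverse-variance weighting: two independent estimates of the same quantity, such as the distance to an obstacle, are combined so that the more reliable sensor gets more weight. This is a textbook technique chosen for illustration, not the method any particular manufacturer uses, and the readings and noise figures below are made up.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # measured distance to the obstacle, in metres
    variance: float  # sensor noise variance, in square metres (smaller = more trustworthy)


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Combine two independent estimates by inverse-variance weighting."""
    w_a = 1.0 / a.variance
    w_b = 1.0 / b.variance
    value = (w_a * a.value + w_b * b.value) / (w_a + w_b)
    variance = 1.0 / (w_a + w_b)  # fused estimate is more certain than either input
    return Estimate(value, variance)


# Hypothetical readings: a precise LIDAR return and a noisier camera-based estimate.
lidar = Estimate(value=42.0, variance=0.04)
camera = Estimate(value=44.0, variance=1.0)
fused = fuse(lidar, camera)
print(f"fused distance: {fused.value:.2f} m, variance: {fused.variance:.3f} m^2")
```

The fused estimate sits close to the LIDAR reading because that sensor was assumed to be far less noisy, and its variance is lower than either input’s. Real systems also have to work out which camera detection and which LIDAR return belong to the same object, and that association step is a large part of the hard perception problem described above.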

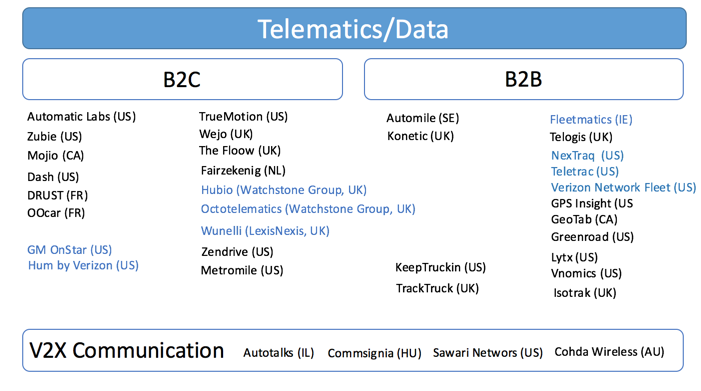

Difference between business and consumer markets

While fully autonomous cars are still somewhat of a distant goal, a lot of innovation is happening in other areas that bring us closer to the ultimate destination. One of those is the self-driving truck industry.

The reason that automating trucks is a more compelling proposition than automating passenger cars is two-fold. First, trucks mainly drive on highways, and recognising obstacles on a highway is an easier problem for algorithms to solve than doing so in an urban environment. Second, fleet operators can already realise significant gains from telematics and vehicle-to-vehicle communication technology. For over ten years, truck manufacturers have been developing driving-support systems that make “platooning” possible. Using vehicle-to-vehicle communication, the vehicles in a platoon follow “the leader”, passing steering, braking and acceleration control to the lead vehicle. This improves traffic safety and increases fuel efficiency by 5–10%. Starting on the 31st of March this year, several truck platoons will drive on public roads across Europe to Rotterdam as part of the first cross-border “platooning challenge”, organised by the European Union.
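
To see what a 5–10% gain means for a fleet operator, the sketch below converts it into litres of fuel per truck per year. It treats the gain as a straight cut in fuel burned, and the annual mileage and fuel consumption are hypothetical values picked for illustration, not figures from the platooning challenge.

```python
def annual_fuel_saved_litres(km_per_year: float, litres_per_100km: float, saving_fraction: float) -> float:
    """Fuel saved per truck per year, treating the platooning gain as a cut in fuel burned."""
    baseline_litres = km_per_year * litres_per_100km / 100
    return baseline_litres * saving_fraction


# Hypothetical long-haul truck, for illustration only.
KM_PER_YEAR = 120_000    # assumed annual distance
LITRES_PER_100KM = 30    # assumed fuel consumption

for saving in (0.05, 0.10):
    litres = annual_fuel_saved_litres(KM_PER_YEAR, LITRES_PER_100KM, saving)
    print(f"{saving:.0%} saving: {litres:,.0f} litres per truck per year")
```

With these assumed inputs, a truck burning 36,000 litres a year would save roughly 1,800 to 3,600 litres; multiplied across a whole fleet, the savings add up quickly.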

Another area where trucks are ahead of personal cars is telematics. For more than a decade, enterprise fleet managers have relied on location-based software to track drivers, optimise routes, and improve efficiency. The falling cost of tracking devices is allowing new entrants to come into the space and compete with more established players.

On the consumer side, connected car devices can be split into two categories: “concierge services”, such as GM’s OnStar, and “smart driving assistants”, such as Automatic Labs and Zubie. Traditional concierge services provide drivers with safety and security features, an onboard wifi connection, navigation, and remote diagnostics in exchange for a monthly subscription fee. Drivers should expect to pay between $19.99 and $34.99 per month for the service.

Smart driving assistants plug into the car’s on-board diagnostics (OBD) port and connect to the driver’s phone, which lets users access data on their driving behaviour. One advantage over traditional concierge services is the much lower cost for the driver, since smart driving assistants use the smartphone’s data connection instead of requiring one of their own. In addition, there is a developing app ecosystem for those who want to make their cars even smarter. Not surprisingly, the biggest proponents of smart driving assistants are insurance companies. The main challenge in this space is to incentivise drivers to share their data, which can be done with innovative reward systems or through a compelling value proposition on the app side.
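
For a sense of what these devices read from the diagnostics port, here is a minimal sketch that decodes two standard OBD-II mode 01 responses: vehicle speed (PID 0x0D, one byte in km/h) and engine RPM (PID 0x0C, computed as (256 * A + B) / 4). How any particular product, such as Automatic or Zubie, actually processes this data is not covered here, so treat the function name and its input format (a response given as space-separated hex bytes) as assumptions.

```python
def decode_obd_response(response: str) -> tuple[str, float]:
    """Decode a mode 01 OBD-II response such as '41 0D 3C' into (name, value).

    Hypothetical helper: assumes the response is a string of space-separated hex bytes.
    """
    data = [int(byte, 16) for byte in response.split()]
    if data[0] != 0x41:  # 0x41 = positive response to a mode 01 request
        raise ValueError("not a mode 01 response")
    pid, payload = data[1], data[2:]
    if pid == 0x0D:  # vehicle speed: A km/h
        return "speed_kmh", float(payload[0])
    if pid == 0x0C:  # engine RPM: (256 * A + B) / 4
        return "engine_rpm", (256 * payload[0] + payload[1]) / 4
    raise ValueError(f"PID 0x{pid:02X} not handled in this sketch")


print(decode_obd_response("41 0D 3C"))     # 0x3C = 60 km/h
print(decode_obd_response("41 0C 1A F8"))  # (256 * 26 + 248) / 4 = 1726 rpm
```

A driving-behaviour app could poll values like these every second or so and flag events such as harsh braking from how quickly the speed drops between samples.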

Map of the different areas and the companies working in each

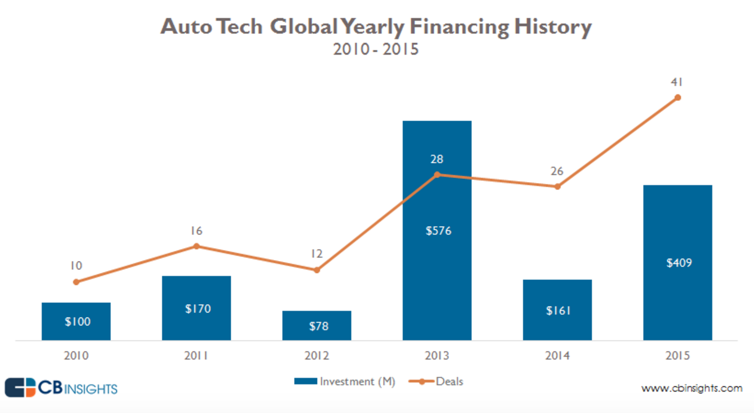

According to CB Insights, last year saw a record number of deals in the Auto Tech space. If autonomous cars become a reality, the current technology stack is going to change. Car manufacturers, suppliers, insurance companies and tech giants all realise that a big shift is coming, and they are busy investing in new technologies that promise to bring us closer to autonomous vehicles.